
Journey Through the Learner//Meets//Future Challenge: Part One

Solve shares trends and learnings from an open innovation challenge that identified AI-enabled tools to improve assessment for Pre-K to Grade 8 learners in the United States.

In March 2024, Solve launched a new challenge funded by the Bill & Melinda Gates Foundation to find ideas and tools that improve educational assessments for young learners in the United States. The Learner//Meets//Future: AI-Enabled Assessments Challenge asked innovators everywhere: How can we use AI to make assessments more personalized, engaging, and predictive for Pre-K to Grade 8 learners in the United States?

This challenge responded to both the excitement and the skepticism surrounding AI in education. It centered on the opportunity to innovate assessment practices in US classrooms to better meet the diverse needs of learners, while recognizing the serious ethical and equity concerns that technology designers, policymakers, and education leaders must consider. The challenge called for solutions that analyze complex cognitive domains, provide highly personalized feedback to learners, teachers, and caregivers, and/or enhance student engagement throughout the assessment process.

“Ultimately, assessment can serve a really important function: shining a light on where people are and are not getting opportunities to learn, and where we can continue to work to level the playing field,” said challenge judge Kristen DiCerbo, Chief Learning Officer at Khan Academy. “But there is common agreement that we should be finding ways to do that better. Now we are looking for opportunities to use new technology to help us solve some of the problems we’ve experienced.”

What did we learn? Between March and May 2024, 84 solutions were submitted to the challenge. They covered a wide range of areas: literacy and math skills, social-emotional learning, and performance and growth in specific subjects such as science, digital literacy, and language learning. One winning solution, Chat2Learn AI Suite, promotes and assesses a novel measure for young children: curiosity.

Given the early but rapidly evolving landscape of AI in education, trends among the applicant pool largely aligned with expectations. Most applicants were at the concept or prototype stage (63%), and another 23% selected pilot as their stage of development. Solutions skewed toward older learners, with 58% of applications addressing the needs of learners in Grades 3-8. Only 13% of submissions were designed for children and their caregivers or educators at the Pre-K and Kindergarten level, suggesting that AI-enabled assessment in early learning is a small but growing field.

Most applications targeted the challenge dimension focused on continuous feedback that is more personalized for learners and teachers and that highlights both strengths and areas for growth based on individual learner profiles. This suggests that personalization is a key focus for AI assessment tools.

“Teachers and educators want to personalize learning and assessments. They know it’s the most effective way, but not every teacher has the time and capacity to do it for all students,” said challenge judge Joseph South, Chief Innovation Officer at the International Society for Technology in Education (ISTE) and the Association for Supervision and Curriculum Development (ASCD). “A lot of teachers are forced to be generalists because they need to cover so many students and topics.”

Solutions addressing student engagement (32% of submissions) and the analysis of complex cognitive domains (25% of submissions) were also common, showing that many assessment tools pursue several goals for improving the learning experience at once.

Reading, writing, and math were the skill sets most proposed solutions targeted. Five of the seven winning solutions focused on literacy: DeeperLearning thru LLM/ML/NLP, Frankenstories & Writelike, LiteraSee by CENTURY Tech, WriteReader AI Assessment, and Quill.org. A sixth winner, ASSISTments: QuickCommentsLive, focuses on math.

More about the finalists and winners: After rigorous rounds of scoring and interviews by reviewers, technical vetters, and an expert panel of judges, 16 solutions were selected as finalists for the challenge. Scroll to the ‘Finalists’ section to learn more about their work.

When evaluating solutions, the challenge judges paid close attention to each tool's potential impact on the education system as a whole, including how well it would meet the current needs of classrooms and districts across the country.

“We asked ourselves: What is the true impact for kids with this particular tool? Is it culturally responsive? Will it support marginalized communities? Is it really something that will fit into our country’s educational system in a big and scalable way?” said challenge judge Maria Hamdani, Head of Assessment and Strategic Partnerships at the Center for Measurement Justice.

Ultimately, judges selected seven winning solutions to share the $500,000 prize pool and participate in a six-month support program designed to help them further develop and scale their work.

And the winners are…

ASSISTments: QuickCommentsLive - leveraging safe and equitable generative AI to provide real-time feedback on math thinking, building student confidence, and saving teachers time.

Chat2Learn AI Suite - an AI-enhanced conversation guide for teachers and parents that promotes and assesses early learners’ curiosity.

DeeperLearning thru LLM/ML/NLP - accelerating deeper student learning while identifying the specific proximal learning opportunities that teachers can target with tool-specified instructional interventions.

Frankenstories & Writelike - using LLMs to evaluate short-form student writing across genres.

LiteraSee by CENTURY Tech - an AI writing assessment that analyzes student writing, provides detailed feedback, and recommends personalized learning interventions.

WriteReader AI Assessment - enables K-5 students to become published authors while building their literacy skills with the help of AI feedback and assessment.

Quill.org - Quill Reading for Evidence is a formative assessment tool that delivers continuous feedback on open-ended writing to build argumentation skills.

Support program components, such as coaching, cohort meet-ups, and tailored workshops on topics like creating and capturing value through different business models and go-to-market strategies, are designed to help winners advance their solutions through early 2025.

“The hope is that they inspire others to go out there and create similar tools or similar solutions, with the knowledge of what AI has done,” Hamdani said. “In some cases, things that seemed impossible are now possible.”

This article is the first of a two-part series that explores trends and learnings from the Learner//Meets//Future Challenge. Come back in early 2025 for an update on the seven winning solutions and how they’re evolving as they take part in the support program!

Tags:

- Learning

- Custom Challenges

Related articles

-

“Education is the one thing you can take with you.” A Q&A with Rudayna Abdo, Founder and CEO, Thaki

-

A LEAP in evidence-based innovation for education

How to address the need for evidence-based innovation in education by empowering researchers, social entrepreneurs and education organizations to work together.

-

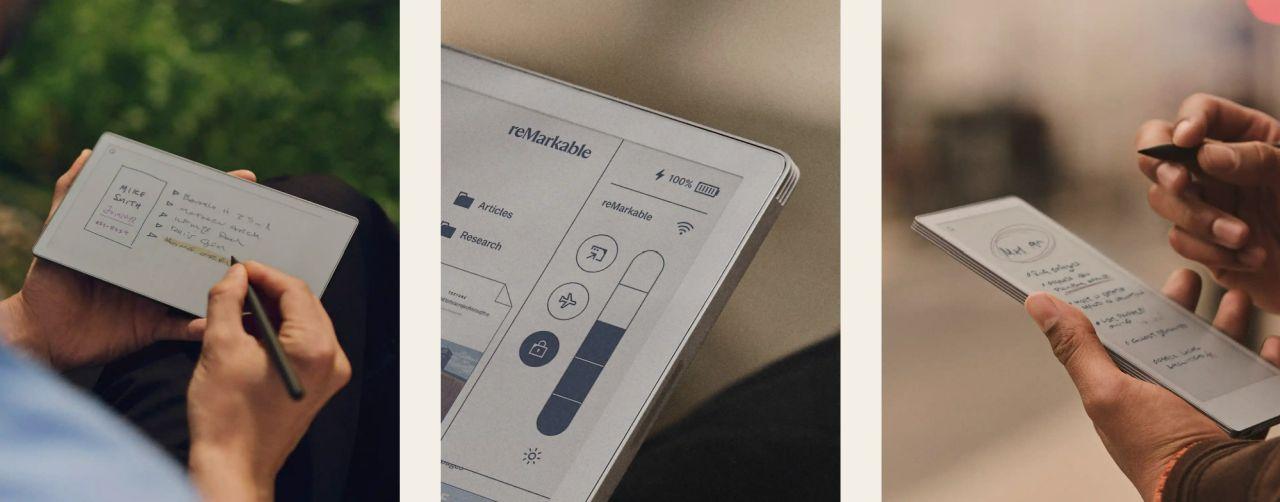

Powered by Purpose: E Ink’s ePaper Technology Takes Aim at the World’s Toughest Problems

Because it draws power only when an image changes—and none at all while static—ePaper reduces energy consumption by orders of magnitude. That single breakthrough unlocks net-zero transit signs, off-grid medical notebooks, and other applications that traditional screens simply can’t power sustainably.