How AI Helped Us Screen Applications in Half the Time


In this AI for Good series, we’ve been exploring the growing operational challenges facing social sector funders. One big issue we’ve noticed across the industry is the influx of grant applications, driven by generative AI tools and shrinking government and multilateral funding.

Solve is no exception. This year, our Global Challenges drew roughly 2,900 applications, our highest number to date. From that pool, we’ll select our 2025 Solver Class of around 30 innovators. You can join us at Solve Challenge Finals in September as we announce the class and celebrate their incredible work!

The Bottleneck: 2,900 Applications, 1 Small Team

A human takes around four minutes to screen each application. Across roughly 2,900 applications, that adds up to about 24 full working days for one person, a huge time investment for a lean, non-profit team, even when the work is split among several staff members.

That’s why we spent the last year working with researchers from Harvard Business School to build and test an AI-powered tool that could support our human screeners during the first stage of our multi-step review process.

At this stage, we filter out applications that are incomplete, ineligible, or not a strong fit for the challenge, whether they’re too early-stage, don’t use technology, or address a different problem than the one posed by the challenge. Our goal this past cycle was to cut screening time by 50% while maintaining the rigor of this initial review step.

From the start of this study, keeping humans in the loop has been a key principle. AI can help with efficiency, but human judgment brings the nuance, context, and diversity of thought that’s necessary to make the best selection decisions. Reviewing solutions at this first stage also keeps our staff abreast of the innovation landscape.

So we designed the tool to do two things:

- Automatically screen out applications that had no realistic chance of proceeding.

- Support our staff reviewers by providing, for each solution:

  - A passing probability: a score between 0 and 100 based on a rubric

  - An overall assessment: a “Pass,” “Fail,” or “Review” label based on the passing probability

  - A justification: the AI tool’s explanation of its recommendation
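To make the relationship between the passing probability and the overall assessment concrete, here is a minimal Python sketch. The cutoff values (`FAIL_BELOW`, `PASS_AT_OR_ABOVE`) and the names `ScreeningResult`, `assess`, and `build_result` are hypothetical; this post does not publish the real thresholds or the tool's code.

```python
from dataclasses import dataclass

# Hypothetical cutoffs for illustration only; the real thresholds are not published.
FAIL_BELOW = 20
PASS_AT_OR_ABOVE = 80


@dataclass
class ScreeningResult:
    passing_probability: int  # rubric-based score from 0 to 100
    assessment: str           # "Pass", "Fail", or "Review"
    justification: str        # the tool's explanation of its recommendation


def assess(passing_probability: int) -> str:
    """Map a 0-100 passing probability to a label using the assumed cutoffs."""
    if not 0 <= passing_probability <= 100:
        raise ValueError("passing probability must be between 0 and 100")
    if passing_probability >= PASS_AT_OR_ABOVE:
        return "Pass"
    if passing_probability < FAIL_BELOW:
        return "Fail"
    # Everything in between goes to a human reviewer.
    return "Review"


def build_result(passing_probability: int, justification: str) -> ScreeningResult:
    """Bundle the three outputs a reviewer would see for one solution."""
    return ScreeningResult(
        passing_probability, assess(passing_probability), justification
    )
```

Keeping a wide “Review” band between the two cutoffs is what leaves the borderline cases in human hands; narrowing the band saves more time but hands more decisions to the tool.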

Faster, Smarter, but Still Human

We launched a working prototype, a Google Colab notebook using the ChatGPT API, in March 2025. After many rounds of testing and tweaking, we felt confident that the tool’s decisions aligned closely with those of expert human screeners. Our top priority was to avoid false negatives and guarantee no strong solution was screened out prematurely.
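One common way to quantify false negatives during this kind of testing is to compare the tool's labels with human decisions on the same applications. The sketch below is an assumption about how such a check could look, not a description of the actual evaluation; the function name `false_negative_rate` and the sample data are made up for illustration.

```python
def false_negative_rate(tool_labels, human_passed):
    """Share of human-approved applications that the tool labeled "Fail".

    tool_labels:  list of "Pass" / "Fail" / "Review" strings from the tool
    human_passed: list of booleans, True where a human screener passed it
    """
    if len(tool_labels) != len(human_passed):
        raise ValueError("both lists must describe the same applications")
    # Keep only the tool labels for applications a human would have passed.
    approved = [label for label, passed in zip(tool_labels, human_passed) if passed]
    if not approved:
        return 0.0
    # A false negative is a human-approved application the tool rejected outright.
    return sum(label == "Fail" for label in approved) / len(approved)


# Made-up validation sample, for illustration only.
sample_tool_labels = ["Pass", "Review", "Fail", "Fail", "Pass"]
sample_human_passed = [True, True, False, True, False]
sample_rate = false_negative_rate(sample_tool_labels, sample_human_passed)
```

Applications labeled “Review” are not counted as false negatives here, because a human still sees them before any decision is made.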

When the 2025 application window closed with 2,901 solutions submitted, we were ready to roll. Here’s what happened:

- 43% of applications were labeled “Pass”

- 16% were labeled “Fail”

- 41% were flagged “Review” for human evaluation

The result? Our staff were able to focus on just the 41% labeled for review, cutting their total screening time from 24 days to just 10. That is a reduction of nearly 60%, ahead of our 50% goal, while maintaining confidence in the quality of results.
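The arithmetic behind those figures can be checked with a short Python sketch. The application count, four minutes per application, and 41% review share come from this post; the eight-hour working day is an assumption, and the sketch ignores any time reviewers spend reading the tool's justifications.

```python
APPLICATIONS = 2_901          # solutions submitted in 2025
MINUTES_PER_APPLICATION = 4   # approximate human screening time
HOURS_PER_WORKING_DAY = 8     # assumption: the post does not define a working day
REVIEW_SHARE = 0.41           # share of applications flagged "Review"


def screening_days(n_applications, minutes_each=MINUTES_PER_APPLICATION,
                   hours_per_day=HOURS_PER_WORKING_DAY):
    """Convert a number of applications into person-days of screening."""
    return n_applications * minutes_each / 60 / hours_per_day


baseline_days = screening_days(APPLICATIONS)                # every application by hand
review_days = screening_days(APPLICATIONS * REVIEW_SHARE)   # only the "Review" pile
reduction = 1 - review_days / baseline_days                 # fraction of time saved

print(f"baseline: {baseline_days:.1f} days, with tool: {review_days:.1f} days, "
      f"saved: {reduction:.0%}")
```

Under these assumptions the baseline rounds to 24 days and the review-only workload rounds to 10, matching the figures above.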

Learning Alongside AI

Next up, we’re reviewing the tool’s performance to further refine the thresholds for the “Pass,” “Fail,” and “Review” labels. We’re also analyzing how passing probabilities correlate with later-stage scores, in hopes of strengthening the tool even more for future cycles.
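As a rough illustration of that kind of analysis, the sketch below computes a Pearson correlation between passing probabilities and later-stage scores. The choice of Pearson correlation, the function name `pearson`, and the sample numbers are all assumptions for illustration; the post does not say which statistic or data format is used.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations, and the two sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return cov / sqrt(var_x * var_y)


# Made-up numbers, for illustration only: passing probabilities (0-100)
# and hypothetical later-stage reviewer scores for the same five solutions.
sample_probabilities = [55, 62, 70, 85, 93]
sample_later_scores = [2.1, 3.0, 2.8, 3.9, 4.4]
sample_r = pearson(sample_probabilities, sample_later_scores)
```

A value near 1 would suggest the passing probability ranks solutions much as later reviewers do, while a value near 0 would suggest the rubric or thresholds need rethinking. Note that later-stage scores exist only for solutions that passed screening, so any such estimate is based on a filtered sample.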

We’ll keep sharing what we learn as we continue building tools that help us source the boldest, most promising innovations from around the world.

Related articles

-

Did AI Get It Right?

Reviewing screening outcomes for the 2025 Global Challenges

-

A Visionary Healthcare Innovator: Dr. Mohamed Aburawi on Tech, Healthcare, and Impact Investing

In the newest episode of The Solve Effect, Dr. Mohamed Aburawi shares how building in crisis can spark innovation that lasts.

-

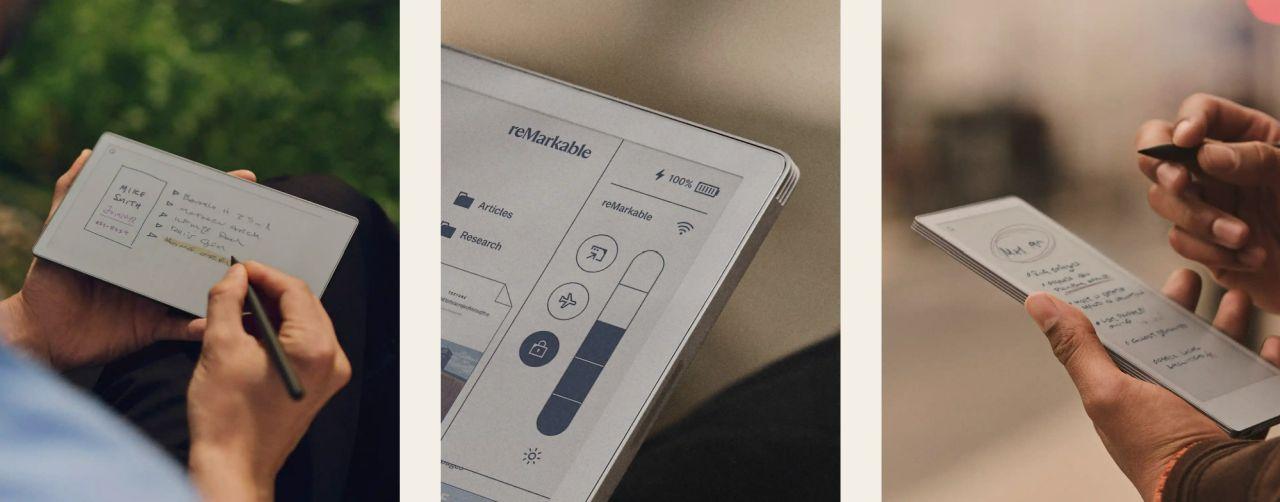

Powered by Purpose: E Ink’s ePaper Technology Takes Aim at the World’s Toughest Problems

Because it draws power only when an image changes—and none at all while static—ePaper reduces energy consumption by orders of magnitude. That single breakthrough unlocks net-zero transit signs, off-grid medical notebooks, and other applications that traditional screens simply can’t power sustainably.